Releases

We released cardano-node-8.9.1 and tagged the soon-to-be-released cardano-node-8.9.2. The 8.9.2 release will fix peer sharing support, which we inadvertently broke in 8.9.1. We expanded our test suite to cover the discovered bugs (see below for more details). Please also see the release tab in our project to check which PRs and issues were included in a given release. The following mapping might also be useful:

- ouroboros-network-0.13.1.0 -> cardano-node-8.9.1
- ouroboros-network-0.14.0.0 -> cardano-node-8.9.2

Genesis

We continued working on network Genesis support:

- ouroboros-network#3396 - churn policy for Genesis;

- ouroboros-network#4813 - outbound governor support for Genesis;

- support for cardano-cli to write a big ledger peers snapshot to disk and for cardano-node to pass it to ouroboros-network.

As well as a feature required by consensus:

TxSubmission

Only a little progress was made due to one of us being on vacation.

Churn and EKG metrics

While working on ouroboros-network#4815, we addressed technical debt in churn. The PR removes implicit synchronisation (in terms of delays) in favour of explicit synchronisation with the outbound governor. It also extends the EKG counters traced by the node. See below for some graphs.

Documentation

We updated the documentation on peer sharing; see cardano-node-wiki#44.

Low level details

Peer Sharing Testing

We wrote a testing scenario for peer sharing, which simulates the following node setup: A -> B <- C, where neither B nor C has any local roots; they only learn about other nodes through (light) peer sharing. B learns about A and C because they connect to it, while C should learn about A through peer sharing. This test scenario should prevent us from breaking peer sharing in obvious ways. We also plan to extend our test suite further with peer sharing in mind. See ouroboros-network#4839 and ouroboros-network#4841.
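The scenario above can be illustrated with a toy model. The following Python sketch is not the actual test from ouroboros-network (which is written in Haskell); the `Node` class and its methods are hypothetical names invented for illustration. It assumes that an inbound connection makes the accepting node aware of the connecting node (light peer sharing), and that a peer sharing request returns the responder's known peers minus the requester.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical toy node that only tracks the set of peers it knows."""
    name: str
    known: set = field(default_factory=set)

    def connect_to(self, other: "Node") -> None:
        # The initiator knows whom it connects to; the acceptor learns
        # about the initiator from the inbound connection (light peer sharing).
        self.known.add(other.name)
        other.known.add(self.name)

    def request_peers(self, other: "Node") -> set:
        # Peer sharing request: the responder shares its known peers,
        # excluding the requester itself.
        shared = other.known - {self.name}
        self.known |= shared
        return shared


# Topology A -> B <- C
a, b, c = Node("A"), Node("B"), Node("C")
a.connect_to(b)
c.connect_to(b)

# C asks B for peers and should learn about A.
learned = c.request_peers(b)
```

After running the sketch, `b.known` contains A and C, and `c.known` contains A, which is the property the real test scenario is meant to protect.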

EKG / Prometheus Counters

Note that this is in progress, so some things might still change.

We will provide counters for active (also known as hot) peers, established (i.e. hot and warm) peers and known (i.e. hot, warm and cold) peers. This mirrors how targets are specified in the node's configuration. In addition, each of the three groups is split into five categories:

- ledger peers

- big ledger peers

- local root peers

- bootstrap peers

- shared peers

We also provide a counter for root peers, which counts ledger peers, big ledger peers, local roots and bootstrap peers; it corresponds to the root peers target TargetNumberOfRootPeers in the configuration.
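To make the grouping concrete, here is a minimal Python sketch of how such counters could be derived from a list of peers. It is an illustration only: the function names, the category labels and the `(state, category)` representation are hypothetical and are not the node's actual metric names or data structures. It also assumes that the root peers counter is taken over known peers, which the text above does not state explicitly.

```python
from collections import Counter

# Hypothetical labels for the five categories listed above.
ROOT_CATEGORIES = ("ledger", "big_ledger", "local_root", "bootstrap")

# Each group is cumulative: established = hot + warm, known = hot + warm + cold.
GROUPS = {
    "active": {"hot"},
    "established": {"hot", "warm"},
    "known": {"hot", "warm", "cold"},
}


def peer_counters(peers):
    """Count peers per (group, category); peers is an iterable of (state, category)."""
    counters = Counter()
    for state, category in peers:
        for group, states in GROUPS.items():
            if state in states:
                counters[(group, category)] += 1
    return counters


def root_peers(counters, group="known"):
    """Sum the root categories; using the 'known' group is an assumption."""
    return sum(counters[(group, category)] for category in ROOT_CATEGORIES)


peers = [
    ("hot", "ledger"),
    ("warm", "ledger"),
    ("cold", "shared"),
    ("hot", "big_ledger"),
    ("warm", "local_root"),
]
counters = peer_counters(peers)
```

In this example the shared peer contributes to the known counters but not to the root peers counter, since shared peers are not root peers.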

We also provide counters for ongoing promotions and demotions.

Ledger peers are affected by TargetNumberOf{Known,Established,Active}Peers and TargetNumberOfRootPeers.

The gap between TargetNumberOfRootPeers and TargetNumberOfKnownPeers will be filled either with ledger peers or with peers that the node discovered through peer sharing.

Big ledger peers are affected by TargetNumberOf{Known,Established,Active}BigLedgerPeers.

Below are some Grafana graphs from an experimental cardano-node branch:

Deprecation policy

The previous hot, warm and cold EKG counters will remain available after the new ones are released, but they will be marked as deprecated and removed at some point in the future.

Grafana graphs

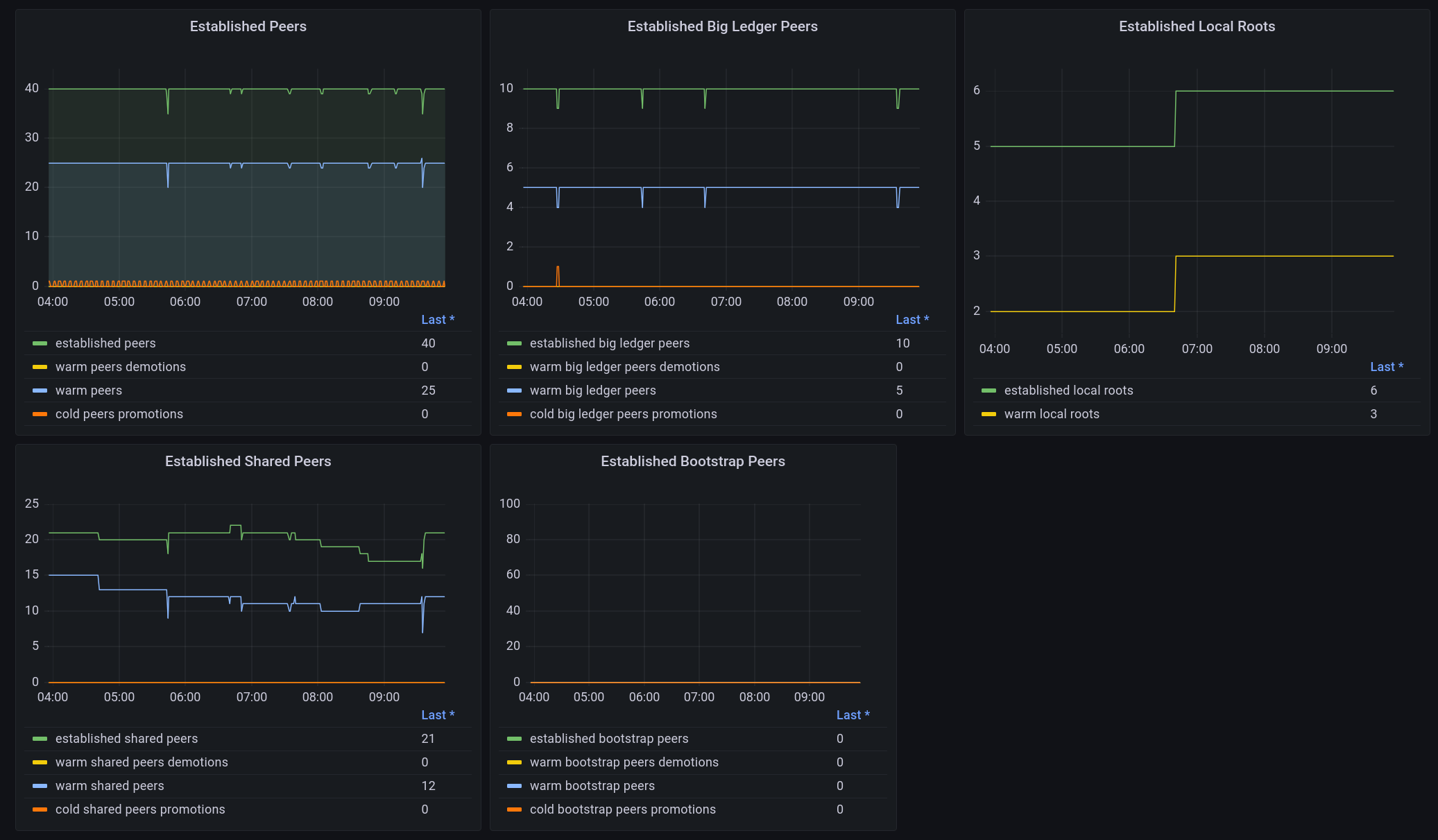

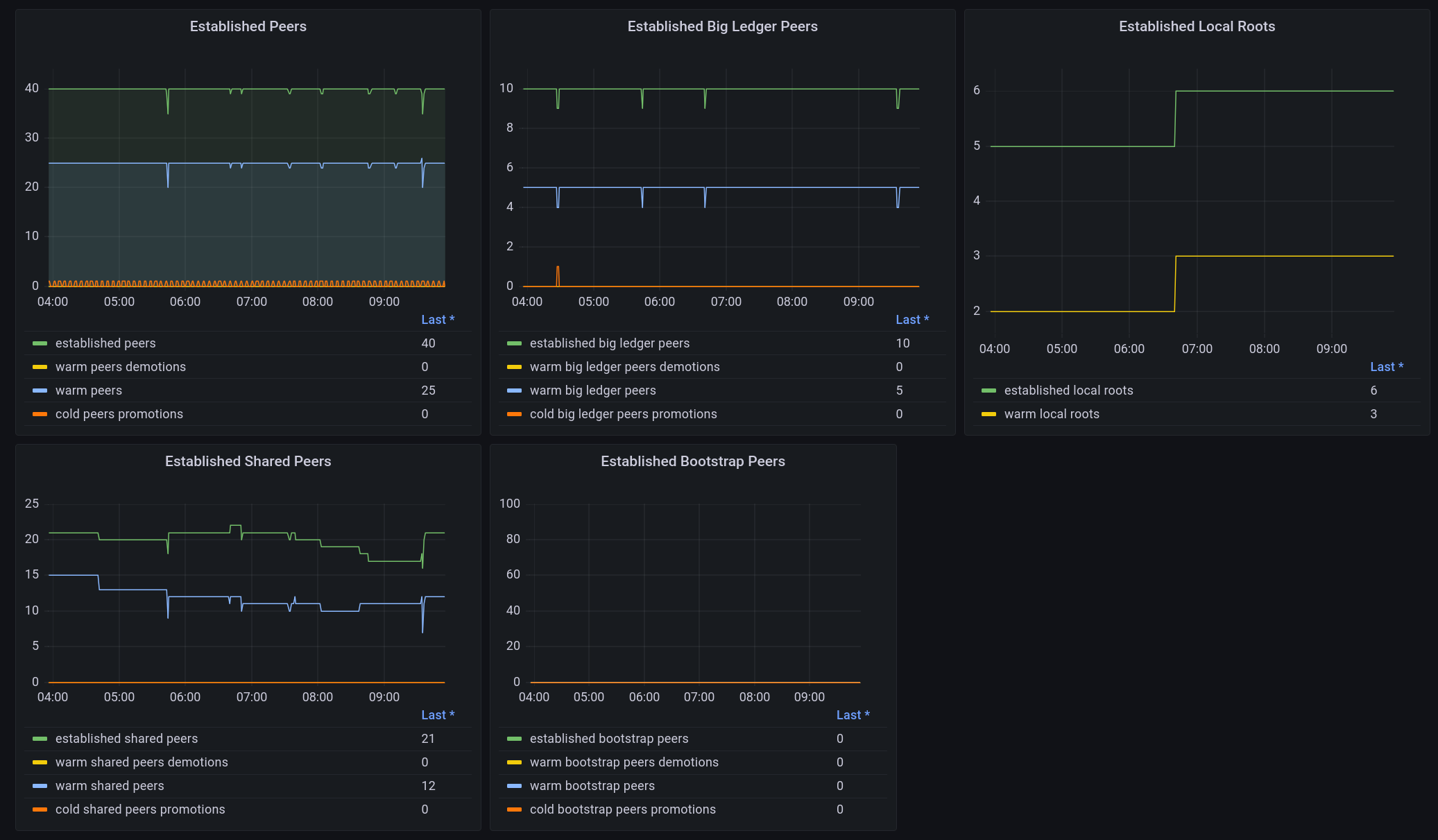

The screenshots are from a node configured without any bootstrap peers, which is why all bootstrap metrics are 0. The node is configured with the following targets:

"TargetNumberOfRootPeers": 60,

"TargetNumberOfKnownPeers": 100,

"TargetNumberOfEstablishedPeers": 40,

"TargetNumberOfActivePeers": 15,

"TargetNumberOfKnownBigLedgerPeers": 15,

"TargetNumberOfEstablishedBigLedgerPeers": 10,

"TargetNumberOfActiveBigLedgerPeers": 5,

and has a small number of local root peers and one peer in its publicRoots configuration.
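As a quick sanity check on these numbers, the Python sketch below loads the targets listed above and computes the gap between the known peers and root peers targets, i.e. the slots left for ledger peers or peers discovered through peer sharing. The helper names are hypothetical, and the ordering check (active <= established <= known) is an assumption about what a sensible configuration looks like, not a statement of the node's actual validation rules.

```python
# Targets copied from the configuration fragment above.
targets = {
    "TargetNumberOfRootPeers": 60,
    "TargetNumberOfKnownPeers": 100,
    "TargetNumberOfEstablishedPeers": 40,
    "TargetNumberOfActivePeers": 15,
    "TargetNumberOfKnownBigLedgerPeers": 15,
    "TargetNumberOfEstablishedBigLedgerPeers": 10,
    "TargetNumberOfActiveBigLedgerPeers": 5,
}


def ordered(t, suffix):
    """Assumed sanity rule: 0 <= active <= established <= known."""
    active = t[f"TargetNumberOfActive{suffix}"]
    established = t[f"TargetNumberOfEstablished{suffix}"]
    known = t[f"TargetNumberOfKnown{suffix}"]
    return 0 <= active <= established <= known


def non_root_gap(t):
    """Known-peer slots not reserved for root peers."""
    return t["TargetNumberOfKnownPeers"] - t["TargetNumberOfRootPeers"]


gap = non_root_gap(targets)
peers_ok = ordered(targets, "Peers")
big_ledger_ok = ordered(targets, "BigLedgerPeers")
```

With the targets above, the gap is 100 - 60 = 40 known-peer slots.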

Active Peers Metrics

Established Peers Metrics

Known Peers Metrics

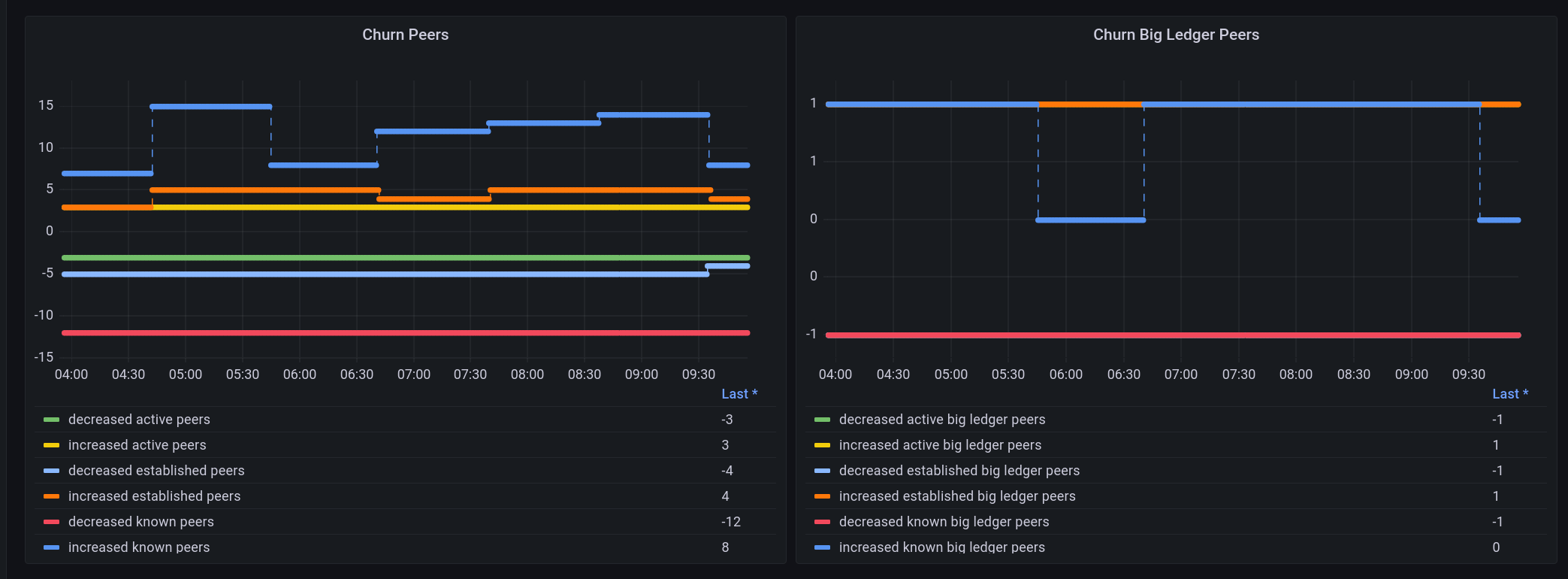

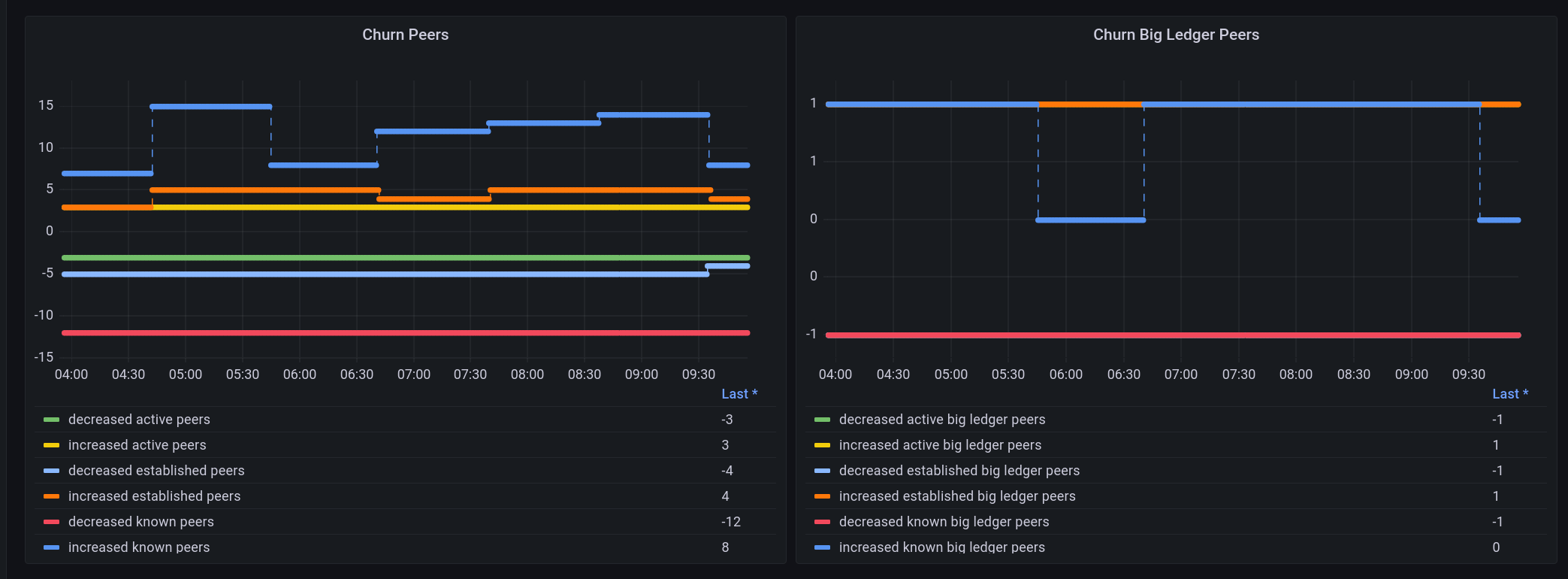

Churn Metrics